Scientific Computing

State-of-the-art computing facilities and expertise drive successful research in experimental and theoretical particle physics. Fermilab is a pioneer in managing "big data" and counts scientific computing as one of its core competencies.

For scientists to understand the huge amounts of raw information coming from particle physics experiments, they must process and analyze the information and compare it to simulations. To accomplish these feats, Fermilab hosts high-performance computing, high-throughput (grid) computing, and storage and networking systems.

In the late 1980s, Fermilab computer scientists developed some of the first collections of networked workstations for use in high-throughput computing. Today Fermilab serves as one of two US computing centers that process and analyze data from experiments at the Large Hadron Collider. The worldwide LHC computing project is one of the world's largest high-throughput computing efforts.

Fermilab has an impressive capacity for storing data over the long term. The laboratory can store about 500 petabytes of data in total, which represents about 20 times the amount stored by all the LHC experiments in a year.
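As a rough check on the comparison above, the two quoted figures together imply that the LHC experiments store on the order of 25 petabytes per year. The short sketch below only performs that division; the function and constant names are illustrative, and both inputs are the approximate values stated in the text.

```python
# Approximate figures quoted in the text above.
FERMILAB_CAPACITY_PB = 500   # total long-term storage, in petabytes
CAPACITY_RATIO = 20          # capacity relative to one year of LHC data


def implied_lhc_annual_pb(capacity_pb: float, ratio: float) -> float:
    """Return the yearly LHC data volume implied by the two figures."""
    return capacity_pb / ratio


if __name__ == "__main__":
    # 500 PB divided by 20 gives 25 PB per year.
    print(implied_lhc_annual_pb(FERMILAB_CAPACITY_PB, CAPACITY_RATIO))
```

Because both inputs are rounded ("about 500", "about 20"), the result is an order-of-magnitude estimate and not a measured figure.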

Hosting computing hardware is necessary but not sufficient to guarantee success. Fermilab also employs a team of computer science experts and particle physicists who specialize in computational techniques. They work to make these resources productive so the broader scientific community can concentrate on the science.

For example, Fermilab computer scientists contribute to and provide consulting support for the computer programs used to analyze particle physics data. These programs, mainly written in the C++ language, can run into millions of lines of code. As a result of this expertise, Fermilab has contributed to extensions to the C++ standard.

Our active cybersecurity protection program also ensures that our computational facilities and data are secure.

To meet the needs of the physics community, Fermilab leads several key scientific computing activities.

The US Lattice QCD Collaboration

Lattice QCD relies on high-performance computing and advanced software to provide precision calculations of the properties of particles that contain quarks and gluons. Fermilab is home to one of the US LQCD collaboration's high-performance computing sites, which uses advanced hardware architectures.

Advanced networking

High-throughput computing for international particle physics collaborations requires the ability to transport large amounts of data quickly around the world. Fermilab has long engaged in network R&D in support of its mission and today boasts 100-gigabit connectivity to local, national and international wide-area networks.
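To put the 100-gigabit figure in perspective, the sketch below estimates the best-case time to move a dataset over a single such link. The one-petabyte dataset is a hypothetical example, not a figure from the text, and the calculation assumes a fully utilized link with no protocol overhead or contention, so real transfers would take longer.

```python
def ideal_transfer_hours(data_bytes: float, link_bits_per_second: float) -> float:
    """Lower bound on transfer time, ignoring protocol overhead and contention."""
    seconds = data_bytes * 8 / link_bits_per_second
    return seconds / 3600


PETABYTE = 10**15          # decimal petabyte in bytes (assumed unit convention)
LINK_100G = 100 * 10**9    # 100 gigabits per second

if __name__ == "__main__":
    hours = ideal_transfer_hours(PETABYTE, LINK_100G)
    # One hypothetical petabyte over an ideal 100 Gb/s link: roughly 22 hours.
    print(f"{hours:.1f} hours")
```

Even under these ideal assumptions, a single petabyte takes most of a day to move, which suggests why network capacity is treated as a core part of high-throughput computing.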

Open Science Grid

Fermilab is a founding member and remains a driver of the Open Science Grid Consortium. The OSG federates local, regional, community and national cyberinfrastructures to meet the needs of research and academic communities at all scales. Researchers from many scientific domains use the OSG infrastructure to access more than 120 computing and storage sites across the United States.

Accelerator modeling and simulation

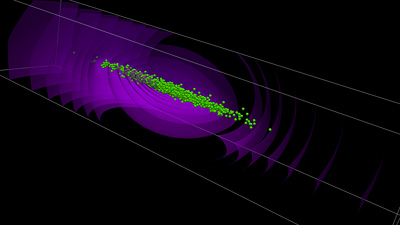

Fermilab collaborates with several national labs and universities to provide software packages that help scientists better understand the physics of beams, so they can make the most of their existing machines and plan the next generation of accelerators.

Learn more about Fermilab's Computing Sector.

- Last modified: 05/09/19
