FSPA officer elections open until Oct. 1

English country dancing Oct. 4 and 25 at Kuhn Barn and special workshop Oct. 15

NALWO evening social - Oct. 7

Process Piping Design; Process Piping, Material, Fabrication, Examination, Testing - Oct. 13, 14, 15, 16

Python Programming Basics - Oct. 14, 15, 16

Interpersonal Communication Skills - Oct. 20

Access 2013: Level 2 / Intermediate - Oct. 21

Excel 2013: Level 2 / Intermediate - Oct. 22

Managing Conflict (morning only) - Nov. 4

PowerPoint 2013: Introduction / Intermediate - Nov. 18

Python Programming Advanced - Dec. 9, 10, 11

Professional and Organization Development 2015-16 fall/winter course schedule

Outdoor soccer

Scottish country dancing Tuesday evenings at Kuhn Barn

International folk dancing Thursday evenings at Kuhn Barn

Norris Recreation Center employee discount

Goodbye, Central Helium Liquefier

This aerial shot shows the L-shaped Central Helium Liquefier building. The row of orange tanks stores helium gas and is known by the CHL crew as the tank farm. Photo: Reidar Hahn

It's time to say goodbye to a beloved assemblage of machinery: In July, the Central Helium Liquefier produced its last batch of liquid helium.

The CHL ran continuously during the Tevatron's operation from 1983 to 2011. Following 4.5 miles of piping to 24 satellite refrigerators around the Main Ring, liquid helium flowed over the Tevatron's magnets to keep them at superconducting temperature — about minus 450 degrees Fahrenheit.

"We relied heavily on the CHL to run the Tevatron," said Jay Theilacker, the Accelerator Division Cryogenics Department head. "And for all those decades it was incredibly reliable."

In the CHL, helium glided through a series of pipes, gigantic compressors, coldboxes and turbines that cooled it from gas to liquid. When running, the massive compressors shook the entire building, forming waves in coffee cups during meetings.

Thanks to the CHL's supply of liquid helium, electrical current flowing through the Tevatron's magnets felt no resistance as it created magnetic fields to steer the Tevatron beam. The CHL also helped rapidly cool quenched magnets, which allowed the Tevatron to resume beam operations.

"It was the lifeblood of the Tevatron," said Jerry Makara, former CHL head. "When we went down, everybody was waiting for us to get back up."

The CHL was so vital to the Tevatron's operation that Fermilab built a second, redundant liquefier in the 1980s. When something in the first liquefier went awry, crews could bring the second liquefier online within 24 hours. These two helium liquefiers remained the world's largest until recently.

At the heart of the CHL was a crew of dedicated staff that manned it 24/7, working 12-hour shifts. Makara recalls the late Ron Walker, CHL head before him, as the "spirit of the CHL." The entire CHL crew, operators and supervisors alike, including Mike Hentges, Gary Hodge and Bob Kolar, emulated Walker's devotion. When the CHL was shut down with the Tevatron, some crew members retired, and others moved on to other jobs within the laboratory.

"One thing that was true for all of us after the shutdown: We appreciated getting a good night's sleep," Makara said.

The CHL was restarted a couple of years ago to test superconducting magnets, first for the MICE experiment, based in the UK, and then for Mu2e. Makara said the CHL's reliable reputation cracked (quite literally, for one compressor) during this "last hurrah." Many parts broke, and the number of functional compressors dwindled from four to one.

"Once you shut things down, it's never quite the same when you start it back up again," Theilacker said. "We were really on our last legs here."

So Fermilab decommissioned the CHL, shutting it down for good this time. The CHL building will be gutted, and salvaged equipment will be distributed among divisions. There's no verdict yet on who will move in next.

—Chris Patrick

Computing Technology Day - Tuesday, Oct. 6

Fermilab Computing will host a Computing Technology Day in Wilson Hall on Tuesday, Oct. 6, from 9 a.m. to 1 p.m. An industry exhibit featuring 20 companies will be on display in the atrium, and the following technical presentations will take place in One West:

9:30-10:20 a.m.: Challenges in the Internet of Things

10:30-11:20 a.m.: Alone in the Dark: DevOps Primer for People Who Care About Security

11:30 a.m.-12:20 p.m.: Data Security and Integrity Precautions Incorporated into General Purpose SAN/Disk-Storage Solutions

This is an opportunity to learn about the latest advances in cloud computing, cybersecurity, big data, computer networking, hardware and software. All Fermilab employees and users are welcome to attend. No registration is required.

In memoriam: Stanley Tawzer

Former Fermilab employee Stanley Tawzer, 82, passed away on Sept. 25. Tawzer worked at Fermilab for more than 30 years.

A visitation will be held on Thursday, Oct. 1, from 11 a.m. until the time of service at 1 p.m. at Cornerstone Christian Church, 312 Geneva Road, Glen Ellyn, Illinois. There will also be an interment at Wheaton Cemetery. More information is available at the Williams Family Funeral Homes website.

China's great scientific leap forward

From The Wall Street Journal, Sept. 24, 2015

Chinese President Xi Jinping's visit to Washington is an excellent opportunity to recognize China's scientific contributions to the global community, and to foster more cooperation between the U.S. and China in many areas of science, especially particle physics.

The discovery of the Higgs particle at Europe's Large Hadron Collider in 2012 began a new era. It confirmed an essential feature of the 40-year-old Standard Model of particle physics, a missing ingredient that was needed to make the whole structure work.

HEPCloud: computing facility evolution for high-energy physics

Panagiotis Spentzouris, head of the Scientific Computing Division, wrote this column.

Every stage of a modern high-energy physics (HEP) experiment requires massive computing resources, and the investment to deploy and operate them is significant. For example, worldwide, the CMS experiment uses 100,000 cores. The United States deploys 15,000 of these at the Fermilab Tier-1 site and another 25,000 cores at Tier-2 and Tier-3 sites. Fermilab also operates 12,000 cores for muon and neutrino experiments, as well as significant storage resources, including about 30 petabytes of disk and 65 petabytes of tape, served by seven tape robots and fast and reliable networking.

And the needs will only grow from there. During the next decade, the intensity frontier program and the LHC will be operating at full strength, while two new programs will come online around 2025: DUNE and the High-Luminosity LHC. The increased event rates and complexity of the HL-LHC alone will push computing needs to approximately 100 times what current HEP capabilities can handle, generating exabytes of data (1 exabyte is 1,000 petabytes)!

HEP must plan now how to process and analyze these vast amounts of new data efficiently and cost-effectively. The industry trend is to use cloud services to reduce the cost of provisioning and operating, provide redundancy and fault tolerance, rapidly expand and contract resources (elasticity), and pay for only the resources used. Adopting this approach, U.S. HEP facilities can benefit from incorporating and managing "rental" resources, achieving the "elasticity" that satisfies demand peaks without overprovisioning local resources.
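The elasticity argument can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the demand figures, the local capacity and the function names are hypothetical and do not come from any Fermilab system. It splits each period's demand into a part served by owned cores and a part that would be rented, and compares that with the capacity left idle if local resources were sized for the largest peak.

```python
def split_demand(demand, local_capacity):
    """Split each period's core demand into a locally served part and a rented part."""
    local = [min(d, local_capacity) for d in demand]
    rented = [max(d - local_capacity, 0) for d in demand]
    return local, rented


def idle_if_sized_for_peak(demand):
    """Core-periods left idle if local capacity were sized to the largest peak."""
    peak = max(demand)
    return sum(peak - d for d in demand)


# Hypothetical demand in cores for four consecutive periods, with one peak.
demand = [10_000, 12_000, 40_000, 11_000]

local, rented = split_demand(demand, local_capacity=12_000)
idle = idle_if_sized_for_peak(demand)
```

In this made-up case only the third period needs rented cores, whereas sizing the local facility for that peak would leave most of it idle in the other three periods.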

The HEPCloud facility concept is a proposed path to this evolution, envisioned as a portal to an ecosystem of computing resources, commercial and academic. It will provide "complete solutions" transparently to all users with agreed-upon levels of service, routing user workflows to local (owned) or remote (rental) resources based on efficiency, cost, workflow requirements and target compute engine policies. This concept takes current practices that are implemented "manually" to the next level (for example, balancing the load between intensity frontier and CMS local computing at Fermilab and using sites of the Open Science Grid). HEPCloud could provide the means to share resources in the ecosystem, potentially linking all U.S. HEP computing.
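As a rough illustration of what routing workflows by cost, requirements and policy could look like, here is a minimal Python sketch. It is not the HEPCloud design or its middleware: the `Resource` and `Workflow` classes, the `route` function, the `allow_rental` flag and all numbers are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str                  # "local" (owned) or "rental" (for example, a commercial cloud)
    free_cores: int
    cost_per_core_hour: float  # hypothetical accounting cost


@dataclass(frozen=True)
class Workflow:
    name: str
    cores: int
    allow_rental: bool = True  # stand-in for a policy on where the workflow may run


def route(workflow: Workflow, resources: list) -> Optional[Resource]:
    """Return the cheapest resource that meets the workflow's needs, or None."""
    candidates = [
        r for r in resources
        if r.free_cores >= workflow.cores
        and (workflow.allow_rental or r.kind == "local")
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r.cost_per_core_hour)


resources = [
    Resource("local-farm", "local", free_cores=1_000, cost_per_core_hour=0.01),
    Resource("cloud", "rental", free_cores=50_000, cost_per_core_hour=0.05),
]

small = route(Workflow("analysis", cores=500), resources)
burst = route(Workflow("simulation", cores=20_000), resources)
blocked = route(Workflow("restricted", cores=20_000, allow_rental=False), resources)
```

Here the small workflow stays on the cheaper local farm, the large one overflows to the rental resource, and the workflow that may not use rental resources finds no match. A real facility would weigh many more factors, such as data locality and service-level agreements.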

In order to demonstrate the value of the approach and better understand the necessary effort, in consultation with the DOE Office of High Energy Physics, we recently started the Fermilab HEPCloud project. The goal is to integrate "rental" resources into the current Fermilab facility in a manner transparent to the user. The project aims to develop a seamless user environment, architecture, middleware and policies for efficient and cost-effective use of different resources, as well as information security policies, procedures and monitoring.

The first type of external resource being implemented is the commercial cloud from Amazon. Working with the experiments, we have identified use cases from CMS, DES and NOvA to demonstrate necessary key aspects of the concept. The project, led by Robert Kennedy and Gabriele Garzoglio (project managers) and Anthony Tiradani (technical lead), is making excellent progress and, working closely with CMS and the Open Science Grid, is preparing to deploy the first use case (CMS simulation). Expected to go into production in the next couple of months, this use case will provide information on scalability, availability and cost-effectiveness.

High-performance computing facilities are an appealing potential external resource for HEPCloud. The capacity of current supercomputers operated by the DOE Office of Advanced Scientific Computing Research is more than 10 times larger than the total of HEP computing needs. The plans for new supercomputers aim to continue this trend. HEP experiments are working to identify use cases for these facilities within the constraints of their allocation, security and access policies. We are actively approaching experts from these facilities to collaborate on this.

HEP computing has been very successful in providing the means for great physics discoveries. As we move into the future, we will work to develop the facilities to enable continued discovery — efficiently and cost-effectively. We believe the HEPCloud concept is a good candidate!

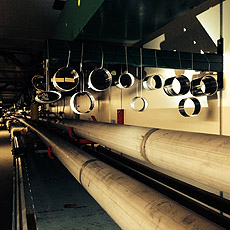

Hanging rings in the ring

Members of the Mechanical Support Department are testing samples of different types of stainless steel and postwelding treatment to determine which will best stand up to corrosion in the Main Injector environment. They conduct these tests in anticipation of higher-intensity beams from the accelerator. Kris A. Anderson, AD, made this set of 10 samples, installed at the 311 region of the Main Injector. He also installed two other sets: another in the Main Injector ring and a third outside it. Photo: Jesse Batko, AD

ESH&Q weekly report, Sept. 29

This week's safety report, compiled by the Fermilab ESH&Q Section, describes two incidents.

An employee's hammer slipped and hit his head, causing a laceration. He received first aid treatment. He later received a tetanus shot and sutures in the emergency room. This case is recordable. An investigation is pending.

A contractor attempted to adjust the jammed wheels of the leak detector while loading it onto a truck. Another contractor pushed from the other side, causing the hitch to hit him in the eye. He suffered a laceration to the eyelid and blunt force trauma to the eyeball. An investigation is pending.

See the full report.
